Principles of Congestion Control

- Packet loss typically results from router buffers overflowing as the network becomes congested.

- To treat the cause of network congestion, mechanisms are needed to throttle senders when congestion occurs.

Scenario 1

- Let's assume that the router has an infinite amount of buffer space.

- The left graph plots the per-connection throughput, that is, the number of bytes per second at the receiver.

- Even if the sending rate is above R/2, the throughput is only R/2.

- The link simply cannot deliver packets to a receiver at a steady-state rate that exceeds R/2.

- Achieving a per-connection throughput of R/2 might actually appear to be a good thing, because the link is fully utilized in delivering packets to their destinations.

- The right graph plots the consequence of operating near link capacity.

- As the sending rate exceeds R/2, the average number of queued packets in the router is unbounded, and the average delay between source and destination becomes infinite.

- We've found one cost of a congested network - large queueing delays are experienced as the packet arrival rate nears the link capacity.
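The two graphs of Scenario 1 can be sketched numerically. This is a minimal sketch, not the exact model behind the figures. It assumes two connections share the link (which is why the cap is R/2), and the delay curve assumes an M/M/1 queue, a model introduced here only to illustrate how delay blows up near capacity. The names `throughput` and `avg_delay` and the value of `R` are illustrative.

```python
import math

R = 1000.0  # link capacity in packets per second (illustrative value)

def throughput(lam_in: float) -> float:
    """Per-connection throughput when two connections share a link of capacity R."""
    return min(lam_in, R / 2)

def avg_delay(lam_in: float) -> float:
    """Average time spent in the router under an assumed M/M/1 model.

    Two senders each offer lam_in, so the aggregate arrival rate is 2 * lam_in.
    """
    total = 2 * lam_in
    if total >= R:
        return math.inf  # the queue grows without bound
    return 1.0 / (R - total)

for lam in (100, 400, 490, 499, 500, 600):
    print(f"lam_in={lam:>4}  throughput={throughput(lam):6.1f}  delay={avg_delay(lam)}")
```

Throughput flattens at R/2 while the delay keeps growing as the sending rate approaches R/2, which is the cost described above.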

Scenario 2

- Now let's modify the scenario in two ways.

- First, the amount of router buffering is assumed to be finite.

- Packets will be dropped when they arrive at an already-full buffer.

- Next, we assume that each connection is reliable.

- If a transport-level segment is dropped at the router, the sender will eventually retransmit it.

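- Therefore, we must now be more careful with our use of the term sending rate: the rate at which the transport layer sends segments (original data plus retransmissions) into the network, LAMBDA_IN', is sometimes referred to as the offered load to the network.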

- Let's assume that the sender retransmits only when a packet is known for certain to be lost.

- In the corresponding figure, when the offered load equals R/2, the rate at which data are delivered to the receiver application is only R/3; the remainder of the offered load consists of retransmissions.

- We see here another cost of a congested network - the sender must perform retransmissions in order to compensate for dropped packets due to buffer overflow.

- Lastly, let's assume that the sender may time out prematurely and retransmit a packet that has been delayed in the queue but not yet lost. In this case, both the original packet and the retransmission may reach the receiver.

- The work done by the router in forwarding the retransmitted copy of the original packet was wasted, as the receiver will have already received the original copy of this packet.

- Here then is yet another cost of a congested network - unneeded retransmissions by the sender in the face of large delays may cause a router to use its link bandwidth to forward unneeded copies of a packet.
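The split between original data and retransmissions in the first retransmission case can be worked out directly. This small sketch assumes the operating point read from the figure (offered load R/2, delivered rate R/3) and normalises R to 1; the variable names are illustrative.

```python
from fractions import Fraction

R = Fraction(1)                      # link capacity, normalised to 1
offered_load = R / 2                 # original data plus retransmissions
goodput = R / 3                      # rate delivered to the receiving application
retransmitted = offered_load - goodput

print(retransmitted)                 # 1/6
print(retransmitted / offered_load)  # 1/3
```

Under these assumptions, one third of what the sender puts on the link is retransmitted data.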

Scenario 3

- In the final scenario, let's consider multiple hosts transmitting packets, each over a path that overlaps with the others among the routers.

- When a packet is dropped along a path, the transmission capacity that was used at each of the upstream links to forward that packet to the point at which it is dropped ends up having been wasted.

TCP Congestion Control

- TCP must use end-to-end congestion control rather than network-assisted congestion control, since the IP layer provides no explicit feedback to the end systems regarding network congestion.

- The approach taken by TCP is to have each sender limit the rate at which it sends traffic into its connection as a function of perceived network congestion.

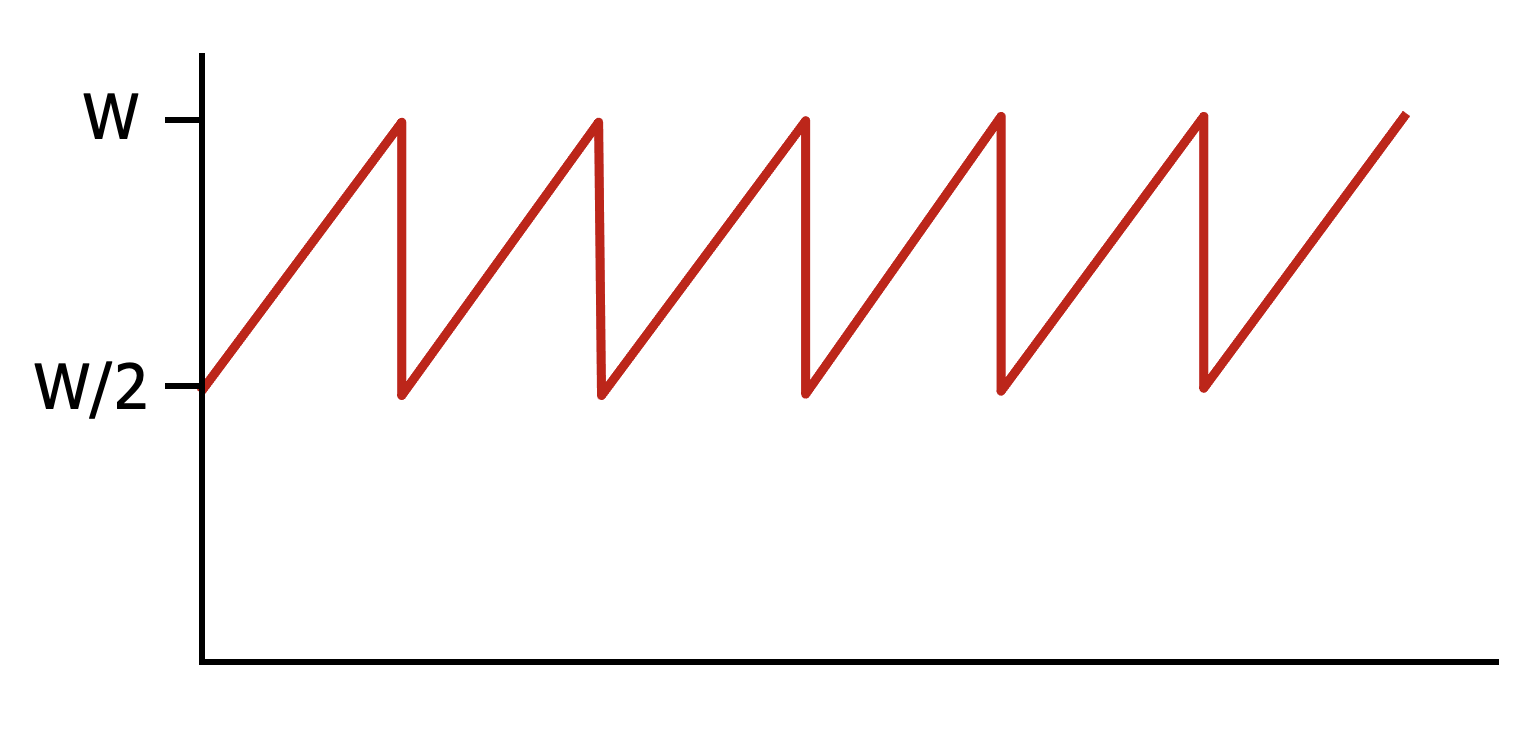

- TCP adopts additive increase, multiplicative decrease (AIMD) for congestion control.

- The TCP congestion control mechanism operating at the sender keeps track of an additional variable, the congestion window (cwnd).

- cwnd is dynamic: it is a function of perceived network congestion.

- We define a loss event at a TCP sender as the occurrence of either a timeout or the receipt of three duplicate ACKs from the receiver.

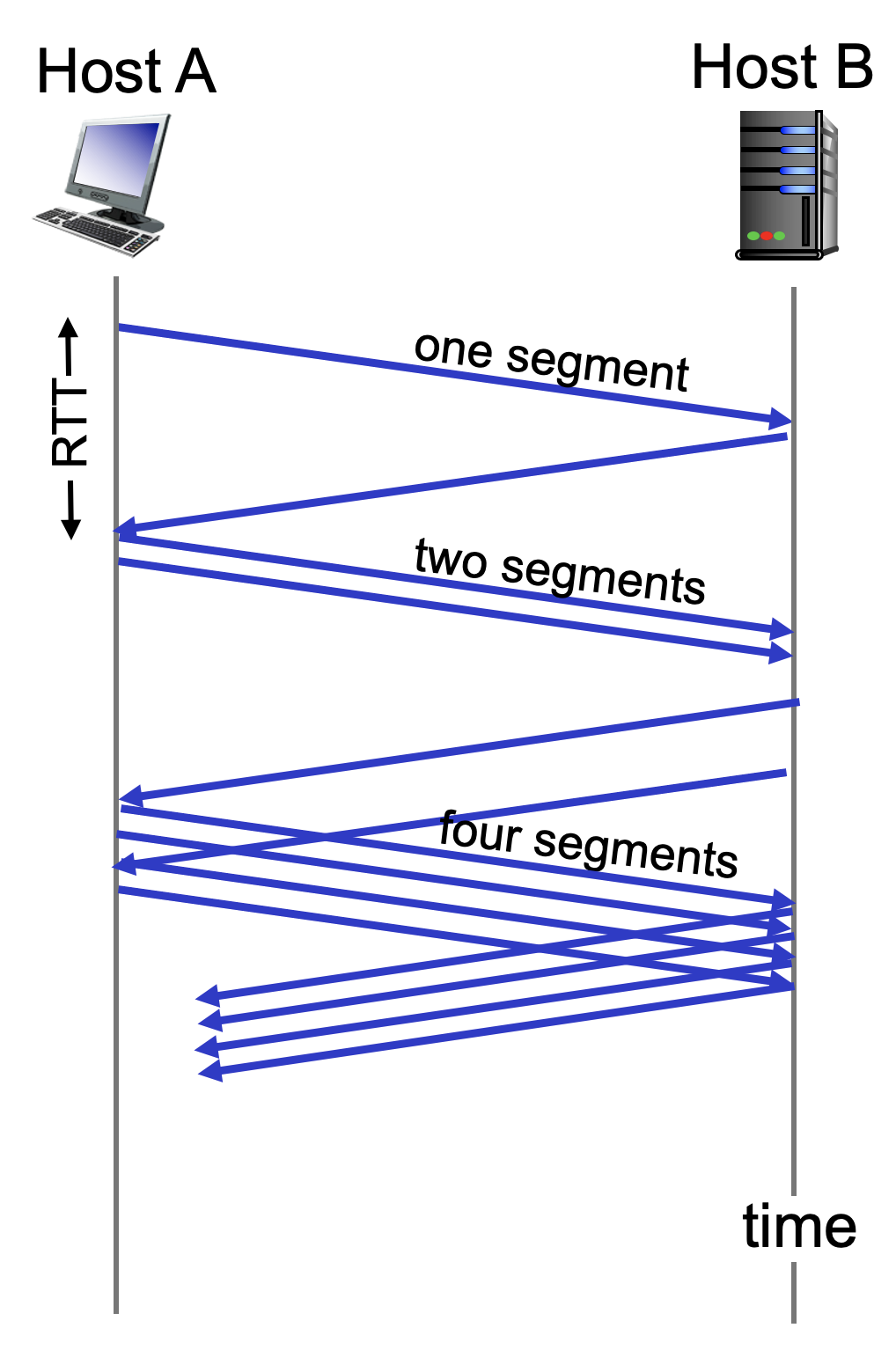

- Also, TCP will take the arrival of acknowledgments as an indication that all is well, and increase its congestion window size.

- Because TCP uses acknowledgements to trigger its increase in congestion window size, TCP is said to be self-clocking.

- When a connection begins, TCP increases its rate exponentially until the first loss event occurs.

- This way, the initial rate is slow, but it ramps up exponentially fast.

- When a loss event occurs, cwnd is cut in half, and the window then grows linearly.

- The sender keeps a threshold that estimates where loss events will start to occur: it increases exponentially below the threshold and switches to additive increase above it.

- When a loss event is signaled by three duplicate ACKs, TCP cuts the window in half and then increases it linearly. This is fast recovery.

- When a loss event is signaled by a timeout, TCP sets cwnd to 1 MSS and grows it exponentially again (slow start).

- ssthresh is the point where Slow Start is changed to Congestion Avoidance mode.

- On a loss event, ssthresh is set to half of the value that cwnd had just before the loss.

- The TCP sending rate is the amount of data sent per unit of time, roughly cwnd/RTT bytes per second. It is an indication of how well TCP is performing in terms of congestion control.
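The slow start, congestion avoidance, and loss reactions described above can be traced with a small simulation. This is a simplified sketch, not a full TCP implementation: cwnd is counted in whole MSS units, there is one event per RTT, slow start is capped at ssthresh, and the temporary window inflation that real fast recovery performs is omitted. The event labels and the function names `simulate_cwnd` and `sending_rate` are hypothetical.

```python
def simulate_cwnd(events, initial_ssthresh=8):
    """Return cwnd (in MSS) after each round, given one event label per RTT.

    events: iterable of "ack", "3dup", or "timeout" (hypothetical labels).
    """
    cwnd, ssthresh = 1, initial_ssthresh
    history = []
    for ev in events:
        if ev == "ack":
            if cwnd < ssthresh:
                cwnd = min(cwnd * 2, ssthresh)  # slow start: double per RTT
            else:
                cwnd += 1                       # congestion avoidance: +1 MSS per RTT
        elif ev == "3dup":
            ssthresh = max(cwnd // 2, 2)        # half of cwnd before the loss
            cwnd = ssthresh                     # halve, then grow linearly
        elif ev == "timeout":
            ssthresh = max(cwnd // 2, 2)        # half of cwnd before the loss
            cwnd = 1                            # restart from slow start
        else:
            raise ValueError(f"unknown event: {ev}")
        history.append(cwnd)
    return history

def sending_rate(cwnd_mss, mss_bytes, rtt_s):
    """Approximate sending rate in bytes per second (cwnd / RTT)."""
    return cwnd_mss * mss_bytes / rtt_s

events = ["ack"] * 5 + ["3dup"] + ["ack"] * 2 + ["timeout"] + ["ack"] * 4
print(simulate_cwnd(events))
# [2, 4, 8, 9, 10, 5, 6, 7, 1, 2, 3, 4, 5]
```

The trace shows exponential growth up to ssthresh, linear growth afterwards, halving on three duplicate ACKs, and a reset to 1 MSS on timeout.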

TCP Throughput

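- The average TCP throughput as a function of window size and RTT is 3/4 * (W / RTT), where W is the window size at which a loss event occurs.

- The problem is that over long, fat pipes (high bandwidth and long RTT), TCP cannot make full use of the available throughput, because sustaining the very large window that is needed requires an extremely low loss rate.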
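The 3/4 factor comes from averaging a sawtooth that climbs from W/2 to W, and the long, fat pipe problem shows up once the window needed for a fast link is computed. The sketch below checks both. The link numbers (10 Gbps, 100 ms RTT, 1500-byte segments) are illustrative assumptions, and the helper names are hypothetical.

```python
def average_window(w_max: int) -> float:
    """Average of a sawtooth that climbs by 1 per RTT from w_max/2 to w_max."""
    samples = range(w_max // 2, w_max + 1)
    return sum(samples) / len(samples)

def required_window(target_bps: float, rtt_s: float, mss_bytes: int) -> float:
    """Segments that must be in flight to sustain target_bps (window / RTT model)."""
    return target_bps * rtt_s / (mss_bytes * 8)

print(average_window(100) / 100)            # 0.75
print(required_window(10e9, 0.1, 1500))     # roughly 83,333 segments in flight
```

A window of tens of thousands of segments can only be sustained if losses are extremely rare, which is why plain AIMD struggles on such paths.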

TCP Fairness

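- The fairness goal of TCP is that if K TCP sessions share the same bottleneck link of bandwidth R, each should have an average rate of R/K.

- UDP acts as a bully in this respect: it has no congestion control, so UDP flows do not back off and can crowd out TCP traffic. For this reason, UDP traffic is sometimes restricted within a network.

- Another problem is that an application can open multiple parallel connections between two hosts and thereby obtain a larger share of the bandwidth.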
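Why AIMD tends toward an equal split can be seen in a toy simulation of two flows sharing one bottleneck. This is a sketch under strong assumptions: both flows have the same RTT, both detect loss in the same round, and rates are in arbitrary units. The function names and the starting values are hypothetical.

```python
def aimd_two_flows(x1: float, x2: float, capacity: float, rounds: int):
    """Evolve two flow rates under synchronised AIMD and return the final rates."""
    for _ in range(rounds):
        if x1 + x2 > capacity:
            x1, x2 = x1 / 2, x2 / 2    # both see loss: multiplicative decrease
        else:
            x1, x2 = x1 + 1, x2 + 1    # no loss: additive increase
    return x1, x2

def share_with_parallel(mine: int, others: int) -> float:
    """Fraction of the link an application gets if every connection gets an equal share."""
    return mine / (mine + others)

a, b = aimd_two_flows(10, 80, capacity=100, rounds=200)
print(a, b)                            # the two rates end up close together
print(share_with_parallel(1, 9))       # 0.1
print(share_with_parallel(11, 9))      # 0.55
```

Additive increase keeps the gap between the flows constant, while each multiplicative decrease halves it, so the rates converge. The second helper shows how opening many parallel connections sidesteps per-connection fairness.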
